In a real-world scenario, your apps (hosted in Docker containers) need to be accessible from the outside world and may need to communicate with other containers. Imagine you have multiple microservices, each hosted in its own container; some of these microservices may need to talk to each other. You may also want to log or write some data to external storage. In this article, let's see how to do this.

Accessing containers from outside

By default, Docker containers can make outgoing connections, but incoming traffic has to be enabled explicitly. Docker provides an elegant way to do this: the -p (or --publish) option publishes a container's port on the host. Let's see how it works in practice by running an Nginx service and making it accessible on port 8080. First things first, download the image.

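Let's create a container from the downloaded nginx image and publish its port 80 on host port 8080. To do so, run the command below (605c77e624dd is the ID of the nginx image downloaded here; yours may differ, so substitute your own image ID or simply use the image name nginx).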

docker container run -d -p 8080:80 --name nginx 605c77e624dd

Now, if we browse to localhost:8080, we get the default Nginx welcome page.

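Let's break down the command we just ran to create the container and allow inbound traffic to reach the default page. When you run a Docker container with -p <host_port>:<container_port>, the Docker engine maps these ports and forwards the packets it receives on the Docker host's port to the container's port. Here, -p 8080:80 forwards traffic arriving on host port 8080 to port 80 inside the container, -d runs the container in the background (detached), and --name nginx gives the container a name you can use instead of its ID.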

You can check the port mappings of any container with the following command:

docker container port <container_id_or_name>

Attaching containers to host network

By default, every container gets its IP address from Docker's default bridge network (the docker0 bridge on the host). This can be changed so that the container uses the host's network stack directly. Let us compare a container with the default configuration against one attached to the host network. To do so, first download the alpine image (Alpine is a very lightweight Linux image).

Next, let's create a container with the default configuration and check its IP address details.

Now let's create a container attached to the host network. To do so, just add the option --net=host.

As you can see in the second case, the container has full access to the host's network stack: it sees the same interfaces and IP addresses as the host itself.
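One quick way to see the difference side by side, assuming a default Linux installation where the docker0 bridge exists on the host: a host-networked container can see docker0 itself, while a container on the default bridge cannot. The loop below simply searches each container's ip address output for that interface name.

```shell
# Check whether the host's docker0 bridge is visible from each network mode
for mode in bridge host; do
  if docker container run --rm --net="$mode" alpine ip address | grep -q docker0; then
    echo "$mode: docker0 visible"
  else
    echo "$mode: docker0 not visible"
  fi
done
```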

Containers with no network

Docker provides three networks out of the box: bridge (the default), host, and none; you can list them with docker network ls. The none network is useful when you don't want the container to have any networking capabilities: only the loopback interface is available inside it.

To do so, run the container with the --net=none option.

For instance:

docker container run --rm --net=none alpine ip address
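To confirm that such a container has no external interface, you can print just the interface names it can see. This sketch reads them from /proc/net/dev, a standard Linux file, so no extra tools are assumed inside the image. Typically only lo is printed; some hosts with extra kernel tunnel modules loaded may also show interfaces such as tunl0, but there is no eth0.

```shell
# Print the interface names visible inside the container (skip the two header lines)
docker container run --rm --net=none alpine \
  awk -F: 'NR > 2 { gsub(/ /, "", $1); print $1 }' /proc/net/dev
```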

Sharing IP address with other containers

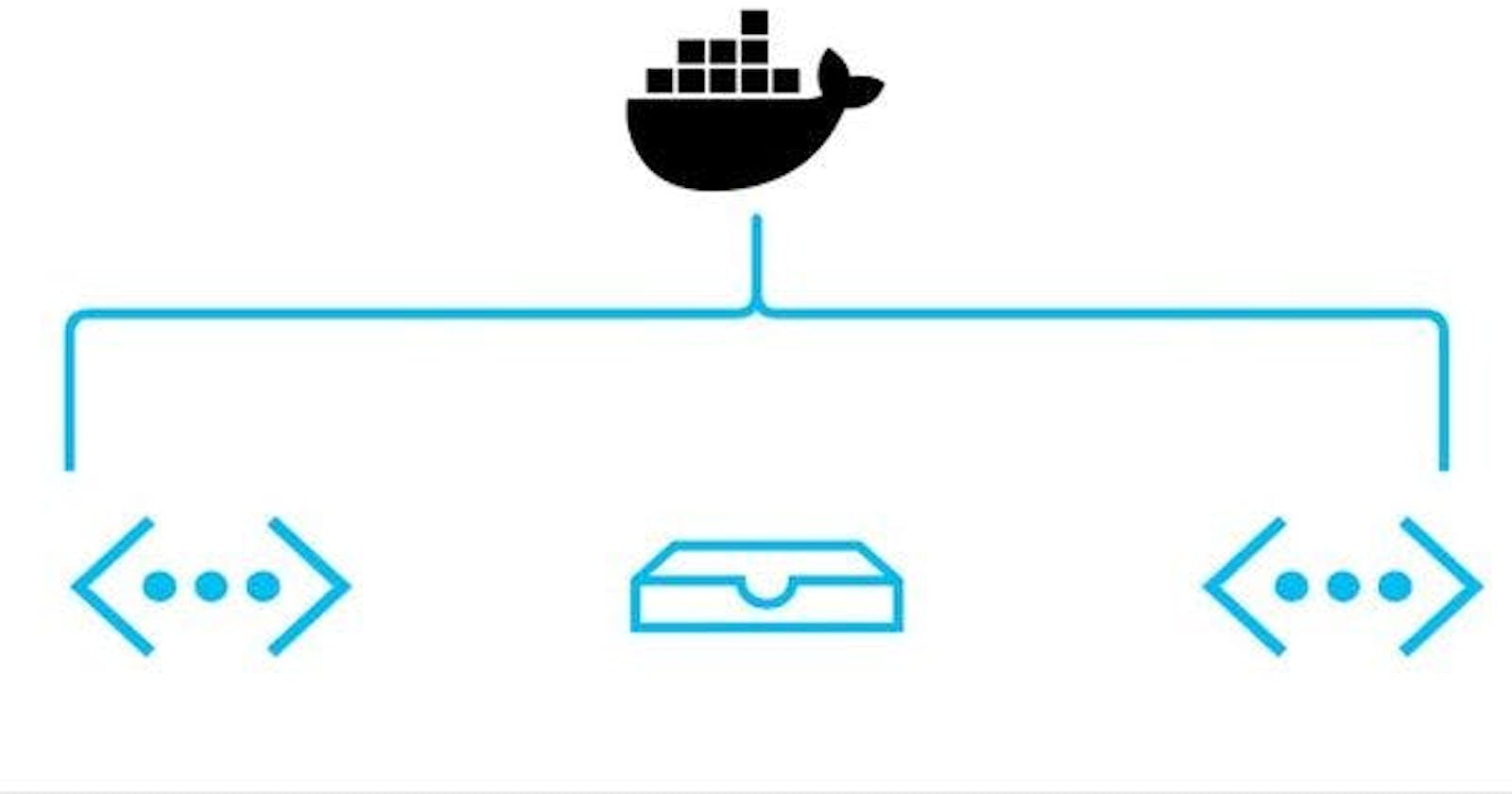

What if you want several apps to have the same IP address? Docker offers a few options. One of them is to run all your apps in the same container, but that goes against the principle of containerization (one concern per container). A better option is to dedicate one container to owning the IP address and let it share its network stack, including that address, with other containers, as depicted in the image above.

First, let's launch a container in the background using the command below.

docker container run -itd --name=ipcontainer alpine

Then let's check its IP address.

docker container exec ipcontainer ip address

Now, let's run a second container attached to the network of ipcontainer, so that it has the same IP address, by adding the option --net container:ipcontainer.

The full command looks like this:

docker container run --rm --net container:ipcontainer alpine ip addr

When containers share an IP address this way, keep the original container running for as long as the other containers are running; otherwise, the other containers are left in an unstable state (they can lose their network connection). Containers in the same Kubernetes pod use this same trick to share an IP address. You can also create your own bridge network and load-balance traffic across containers. To learn more about Docker networking, refer to the official Docker documentation.